Domino can run on a Kubernetes cluster provided by the Azure Kubernetes Service (AKS).

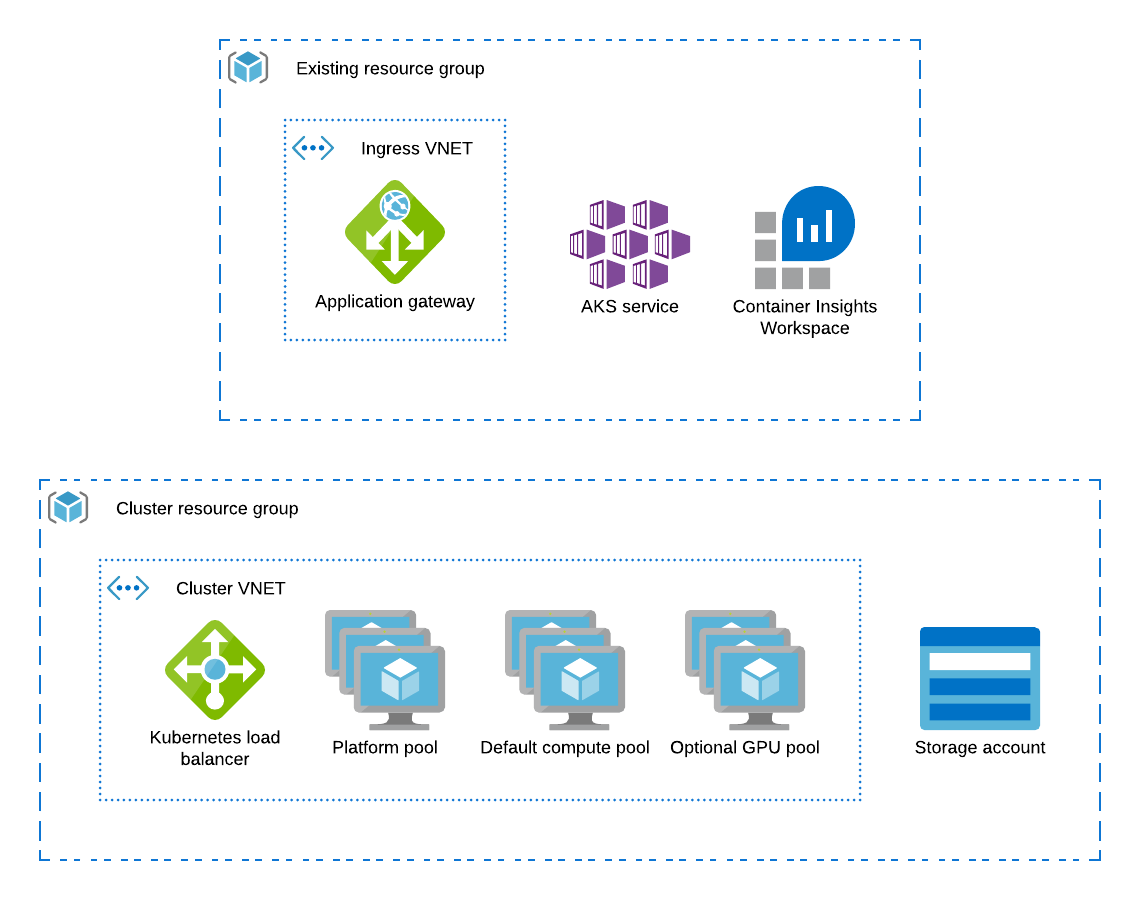

When running on AKS, the Domino architecture uses Azure resources to fulfill the Domino cluster requirements as follows:

- For a complete Terraform module for Domino-compatible AKS provisioning, see terraform-azure-aks on GitHub.
- The AKS control plane, with managed Kubernetes masters, handles Kubernetes control.
- The AKS cluster’s default node pool is configured to host the Domino platform.
- Additional AKS node pools provide compute nodes for user workloads.
- When Domino is deployed in AKS, it is compatible with the `containerd` runtime, which is the AKS default runtime for Kubernetes 1.19 and above.
- When using the `containerd` runtime, the Azure Container Registry stores Domino images.
- An Azure storage account stores Domino blob data and datasets.
- The `kubernetes.io/azure-disk` provisioner creates persistent volumes for Domino executions.
- The Advanced Azure CNI is used for cluster networking, and Calico enforces network policy.
- An SSL-terminating Application Gateway that points to a Kubernetes load balancer handles Ingress to the Domino application.
- Domino recommends provisioning with Terraform for extended control and customizability of all resources. When you set up your Azure Terraform provider, add a `partner_id` with a value of `31912fbf-f6dd-5176-bffb-0a01e8ac71f2` to enable usage attribution.
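As a minimal sketch of the usage-attribution setting, the `partner_id` can be set directly on the `azurerm` provider block. The empty `features {}` block is an assumption about the provider version in use (azurerm 2.x and later require it); authentication settings are omitted.

```hcl
# Sketch only: authentication and subscription settings are omitted.
provider "azurerm" {
  # Assumed provider version 2.x or later, which requires a features block.
  features {}

  # Enables usage attribution for Domino deployments.
  partner_id = "31912fbf-f6dd-5176-bffb-0a01e8ac71f2"
}
```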

This section describes how to configure an AKS cluster for use with Domino.

Resource groups

You can provision the cluster, storage, and application gateway in an existing resource group. When Azure creates the cluster, it also creates a separate resource group that contains the cluster components themselves.
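The following sketch shows one way to reference an existing resource group from Terraform and to surface the name of the separate resource group that Azure creates. The data source label `existing` and the output name are hypothetical; `azurerm_kubernetes_cluster.aks` refers to the cluster resource used in the examples on this page.

```hcl
# Look up an existing resource group instead of creating a new one.
# "existing" is a hypothetical label chosen for this sketch.
data "azurerm_resource_group" "existing" {
  name = "example_resource_group"
}

# Expose the name of the resource group that Azure generates for the
# cluster components (nodes, disks, load balancers).
output "aks_node_resource_group" {
  value = azurerm_kubernetes_cluster.aks.node_resource_group
}
```

Other resources can then use `data.azurerm_resource_group.existing.name` and `data.azurerm_resource_group.existing.location` rather than hard-coded strings.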

Namespaces

You do not have to configure namespaces prior to install. Domino will create three namespaces in the cluster during installation, according to the following specifications:

| Namespace | Contains |
|---|---|
| `domino-platform` | Durable Domino application, metadata, platform services required for platform operation |
| `domino-compute` | Ephemeral Domino execution pods launched by user actions in the application |
| `domino-system` | Domino installation metadata and secrets |

Node pools

The AKS cluster must have at least two node pools: platform (for platform nodes) and default (for compute nodes).

The cluster can also include a default-GPU node pool.

All node pools must contain worker nodes with the following specifications and distinct node labels:

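| Pool | Min-Max | VM | Disk | Labels |
|---|---|---|---|---|
| `platform` | 4-6 | Standard_DS5_v2 | 128G | `dominodatalab.com/node-pool: platform` |
| `default` | 1-20 | Standard_DS4_v2 | 128G | `dominodatalab.com/node-pool: default`, `domino/build-node: true` |
| Optional: `default-gpu` | 0-5 | Standard_NC6s_v3 | 128G | `dominodatalab.com/node-pool: default-gpu`, `nvidia.com/gpu: true` |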

The recommended architecture creates the platform node pool by configuring the cluster’s initial default node pool.

See the following cluster Terraform resource for a complete example.

```hcl
resource "azurerm_kubernetes_cluster" "aks" {
  name                       = "example_cluster"
  enable_pod_security_policy = false
  location                   = "East US"
  resource_group_name        = "example_resource_group"
  dns_prefix                 = "example_cluster"
  private_cluster_enabled    = false

  # The initial default node pool hosts the Domino platform.
  default_node_pool {
    enable_node_public_ip = false
    name                  = "platform"
    node_count            = 4
    node_labels           = { "dominodatalab.com/node-pool" = "platform" }
    vm_size               = "Standard_DS5_v2"
    availability_zones    = ["1", "2", "3"]
    max_pods              = 250
    os_disk_size_gb       = 128
    node_taints           = []
    enable_auto_scaling   = true
    min_count             = 1
    max_count             = 4
  }

  # Advanced Azure CNI networking with Calico network policy.
  network_profile {
    load_balancer_sku  = "Standard"
    network_plugin     = "azure"
    network_policy     = "calico"
    dns_service_ip     = "100.97.0.10"
    docker_bridge_cidr = "172.17.0.1/16"
    service_cidr       = "100.97.0.0/16"
  }
}
```

You must add the default compute node pool after the cluster is created.

This is not the initial cluster default node pool, but a separate node pool named default.

It contains the default Domino compute nodes.

See the following node pool Terraform resource for a complete example.

```hcl
resource "azurerm_kubernetes_cluster_node_pool" "aks" {
  enable_node_public_ip = false
  kubernetes_cluster_id = "example_cluster_id"
  name                  = "default"
  node_count            = 1
  vm_size               = "Standard_DS4_v2"
  availability_zones    = ["1", "2", "3"]
  max_pods              = 250
  os_disk_size_gb       = 128
  os_type               = "Linux"

  # Labels that Domino uses to schedule executions and image builds.
  node_labels = {
    "domino/build-node"            = "true"
    "dominodatalab.com/build-node" = "true"
    "dominodatalab.com/node-pool"  = "default"
  }

  node_taints         = []
  enable_auto_scaling = true
  min_count           = 1
  max_count           = 20
}
```

You can add node pools with distinct `dominodatalab.com/node-pool` labels to make other instance types available for Domino executions.

See Manage Compute Resources to learn how these different node types are referenced by label from the Domino application.

When you add GPU node pools, consider the Azure best practices on using GPU nodes in AKS.
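As an illustration, an optional GPU node pool could be declared in the same way as the default compute pool. This is a sketch under several assumptions, not a definitive configuration: the resource label `gpu`, the pool name `defaultgpu` (AKS pool names accept only lowercase letters and digits, so a hyphenated name is not possible), the VM size `Standard_NC6s_v3`, and the `nvidia.com/gpu` label are all illustrative choices. It reuses the argument names from the node pool example on this page.

```hcl
# Hypothetical GPU pool; the name, VM size, and labels are assumptions.
resource "azurerm_kubernetes_cluster_node_pool" "gpu" {
  enable_node_public_ip = false
  kubernetes_cluster_id = "example_cluster_id"
  name                  = "defaultgpu" # lowercase letters and digits only
  vm_size               = "Standard_NC6s_v3"
  os_disk_size_gb       = 128
  os_type               = "Linux"

  # A distinct node-pool label lets Domino target this pool by name.
  node_labels = {
    "dominodatalab.com/node-pool" = "default-gpu"
    "nvidia.com/gpu"              = "true"
  }

  # Scale to zero when no GPU executions are running.
  enable_auto_scaling = true
  min_count           = 0
  max_count           = 5
}
```

Allowing the pool to scale down to zero nodes avoids paying for idle GPU instances, at the cost of a longer start time for the first GPU execution.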

Network plugin

The Domino-hosting cluster must use the Advanced Azure CNI with network policy enforcement by Calico.

See the following network_profile configuration example.

```hcl
network_profile {
  load_balancer_sku  = "Standard"
  network_plugin     = "azure"
  network_policy     = "calico"
  dns_service_ip     = "100.97.0.10"
  docker_bridge_cidr = "172.17.0.1/16"
  service_cidr       = "100.97.0.0/16"
}
```

Dynamic block storage

AKS clusters come equipped with several kubernetes.io/azure-disk backed storage classes by default.

Domino requires use of premium disks for adequate input and output performance.

You can use the managed-premium class that is created by default.

Consult the following storage class specification as an example.

```yaml
allowVolumeExpansion: true
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  labels:
    kubernetes.io/cluster-service: "true"
  name: managed-premium
  selfLink: /apis/storage.k8s.io/v1/storageclasses/managed-premium
parameters:
  cachingmode: ReadOnly
  kind: Managed
  # Premium disks provide the I/O performance that Domino requires.
  storageaccounttype: Premium_LRS
provisioner: kubernetes.io/azure-disk
reclaimPolicy: Delete
volumeBindingMode: Immediate
```

Persistent blob and data storage

Domino uses one Azure storage account for both blob data and files. See the following configuration for the two resources required, the storage account itself and a blob container inside the account.

```hcl
resource "azurerm_storage_account" "domino" {
  name                     = "example_storage_account"
  resource_group_name      = "example_resource_group"
  location                 = "East US"
  account_kind             = "StorageV2"
  account_tier             = "Standard"
  account_replication_type = "LRS"
  access_tier              = "Hot"
}

# Blob container inside the storage account.
resource "azurerm_storage_container" "domino_registry" {
  name                  = "docker"
  storage_account_name  = "example_storage_account"
  container_access_type = "private"
}
```

Record the names of these resources for use when you install Domino.
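One way to capture these values is with Terraform outputs, sketched below. The output names are hypothetical; the resource addresses match the storage examples on this page, and `primary_access_key` is the account key attribute exported by `azurerm_storage_account`.

```hcl
# Hypothetical output names; adjust to your own conventions.
output "domino_storage_account_name" {
  value = azurerm_storage_account.domino.name
}

output "domino_storage_container_name" {
  value = azurerm_storage_container.domino_registry.name
}

# The account key is a secret, so it is marked sensitive to keep it
# out of plan and apply output.
output "domino_storage_account_key" {
  value     = azurerm_storage_account.domino.primary_access_key
  sensitive = true
}
```

After `terraform apply`, `terraform output domino_storage_account_name` prints the recorded value.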

Domain

Domino must be configured to serve from a specific FQDN. To serve Domino securely over HTTPS, you need an SSL certificate that covers the chosen name. Record the FQDN for use when installing Domino.

Important: A Domino install can’t be hosted on a subdomain of another Domino install. For example, if you have Domino deployed at data-science.example.com, you can’t deploy another instance of Domino at acme.data-science.example.com.

See the following example configuration file for the Installation Process based on the previous provisioning examples.

```yaml
schema: '1.0'
name: domino-deployment
version: 4.1.9
hostname: domino.example.org
pod_cidr: '100.97.0.0/16'
ssl_enabled: true
ssl_redirect: true
request_resources: true
enable_network_policies: true
enable_pod_security_policies: true
create_restricted_pod_security_policy: true
namespaces:
  platform:
    name: domino-platform
    annotations: {}
    labels:
      domino-platform: 'true'
  compute:
    name: domino-compute
    annotations: {}
    labels: {}
  system:
    name: domino-system
    annotations: {}
    labels: {}
ingress_controller:
  create: true
  gke_cluster_uuid: ''
storage_classes:
  block:
    create: false
    name: managed-premium
    type: azure-disk
    access_modes:
    - ReadWriteOnce
    base_path: ''
    default: false
  shared:
    create: true
    name: dominoshared
    type: azure-file
    access_modes:
    - ReadWriteMany
    efs:
      region: ''
      filesystem_id: ''
    nfs:
      server: ''
      mount_path: ''
      mount_options: []
    azure_file:
      storage_account: ''
blob_storage:
  projects:
    type: shared
    s3:
      region: ''
      bucket: ''
      sse_kms_key_id: ''
    azure:
      account_name: ''
      account_key: ''
      container: ''
    gcs:
      bucket: ''
      service_account_name: ''
      project_name: ''
  logs:
    type: shared
    s3:
      region: ''
      bucket: ''
      sse_kms_key_id: ''
    azure:
      account_name: ''
      account_key: ''
      container: ''
    gcs:
      bucket: ''
      service_account_name: ''
      project_name: ''
  backups:
    type: shared
    s3:
      region: ''
      bucket: ''
      sse_kms_key_id: ''
    azure:
      account_name: ''
      account_key: ''
      container: ''
    gcs:
      bucket: ''
      service_account_name: ''
      project_name: ''
  default:
    type: shared
    s3:
      region: ''
      bucket: ''
      sse_kms_key_id: ''
    azure:
      account_name: ''
      account_key: ''
      container: ''
    gcs:
      bucket: ''
      service_account_name: ''
      project_name: ''
    enabled: true
autoscaler:
  enabled: false
  cloud_provider: azure
  groups:
  - name: ''
    min_size: 0
    max_size: 0
  aws:
    region: ''
  azure:
    resource_group: ''
    subscription_id: ''
spotinst_controller:
  enabled: false
  token: ''
  account: ''
external_dns:
  enabled: false
  provider: aws
  domain_filters: []
  zone_id_filters: []
git:
  storage_class: managed-premium
email_notifications:
  enabled: false
  server: smtp.customer.org
  port: 465
  encryption: ssl
  from_address: domino@customer.org
  authentication:
    username: ''
    password: ''
monitoring:
  prometheus_metrics: true
  newrelic:
    apm: false
    infrastructure: false
    license_key: ''
helm:
  tiller_image: gcr.io/kubernetes-helm/tiller
  appr_registry: quay.io
  appr_insecure: false
  appr_username: '$QUAY_USERNAME'
  appr_password: '$QUAY_PASSWORD'
private_docker_registry:
  server: quay.io
  username: '$QUAY_USERNAME'
  password: '$QUAY_PASSWORD'
internal_docker_registry:
  s3_override:
    region: ''
    bucket: ''
    sse_kms_key_id: ''
  gcs_override:
    bucket: ''
    service_account_name: ''
    project_name: ''
  azure_blobs_override:
    account_name: 'example_storage_account'
    account_key: 'example_storage_account_key'
    container: 'docker'
telemetry:
  intercom:
    enabled: false
  mixpanel:
    enabled: false
    token: ''
gpu:
  enabled: false
fleetcommand:
  enabled: false
  api_token: ''
teleport_kube_agent:
  enabled: false
  proxyAddr: teleport-domino.example.org:443
  authToken: TOKEN
```