You can always modify the contents of a Dataset or rename the Dataset.

Tip

Always create a snapshot before modifying the contents of a Dataset so that you can return to the previous version of the data.

Delete a Dataset

If you no longer need the entire Dataset, you can mark it for deletion. Marking a Dataset for deletion removes the Dataset and its associated snapshots from the originating Project and from all Projects it was shared with, and Domino executions can no longer use it. A Domino administrator must perform the final deletion.

- In the navigation pane, click Data, then click the name of the Dataset to delete.

- Click Delete Dataset.

- Click Delete Dataset again to confirm that you want to mark the Dataset for deletion. Your administrator will permanently delete the Dataset.

Add or remove files

You can add or delete files in a Dataset using the Domino UI. With the CLI, you can add all the files in a folder to a Dataset.

- In the navigation pane, click Data, then click the name of the Dataset to change.

- To add files, click Upload files.

- To delete files, select the files to delete, then click Delete Selected Items.

- To rename the Dataset, click Rename Dataset, enter the new name, then click Rename.

Before you delete a file whose name contains a special character such as a backslash (\), you must rename the file. A tilde (~) or colon (:) can appear anywhere in a filename except at the beginning. If the name of the file you want to delete starts with a tilde or colon, rename it before deleting it.
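The filename rules in the paragraph above can be expressed as a small check. This is only a sketch of those rules. The function name must_rename_before_delete is hypothetical and is not part of Domino. It also covers only the characters the text names: a backslash anywhere, or a tilde or colon at the start.

```python
def must_rename_before_delete(filename: str) -> bool:
    """Hypothetical helper: True if, per the rules above, the file must be
    renamed before it can be deleted from a Dataset."""
    # A backslash anywhere in the name requires a rename first.
    if "\\" in filename:
        return True
    # A tilde or colon is allowed except as the first character.
    return filename.startswith(("~", ":"))
```

For example, a file named report~v2.csv can be deleted directly. A file named ~draft.csv must be renamed first.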

Rename files and folders

You can rename the latest version of a file or folder in a Dataset. Domino does not rename files or folders in snapshots, so earlier snapshots keep the original names.

Warning

After you rename a file or folder, you must update all references to its original name. If you don't, your Project might not work. For example, you might see inconsistencies in text files and documentation.

-

Go to a Project that uses a Dataset.

-

In the navigation pane, click Data.

-

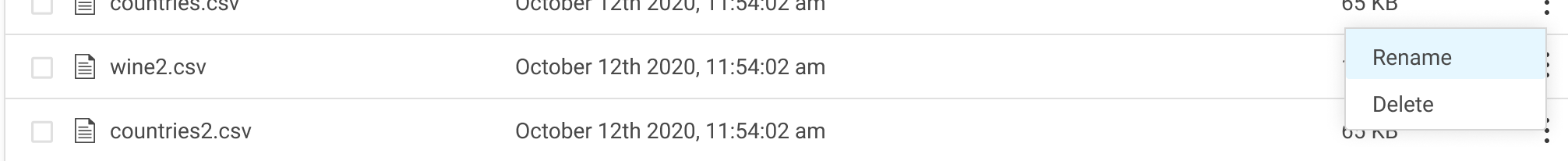

To rename the file or folder, go to the end of the row and click the three vertical dots.

-

Click Rename.

-

In the Rename window, enter the New Name and click Rename.
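After a rename, you still need to update references to the old name yourself. One way to find them is to scan your Project's text files for that name. The following is a minimal sketch. The function find_references is hypothetical, and the search is a plain substring match. It skips files that cannot be read as UTF-8 text and does not understand paths built dynamically in code.

```python
import os
from typing import Iterator, Tuple


def find_references(root: str, old_name: str) -> Iterator[Tuple[str, int]]:
    """Yield (path, line_number) for every line under root that contains old_name."""
    for dirpath, _dirnames, filenames in os.walk(root):
        for filename in filenames:
            path = os.path.join(dirpath, filename)
            try:
                with open(path, encoding="utf-8") as f:
                    for lineno, line in enumerate(f, start=1):
                        if old_name in line:
                            yield path, lineno
            except (UnicodeDecodeError, OSError):
                # Skip binary or unreadable files.
                continue
```

For example, running this from your Project's root directory lists each file and line that still mentions the old file name:

    for path, lineno in find_references(".", "old_name.csv"):
        print(f"{path}:{lineno}")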

If you have data in an external source that you want to fetch periodically and load into Domino, you can set up scheduled Jobs that write to Datasets.

Suppose an external Data Source holds a file that is updated periodically. To fetch the latest state of that file once a week and load it into a Domino Dataset, you could set up a scheduled Job:

- Create a Dataset to store the data from the external source.

- Write a script that fetches the data and writes it to the Dataset.

- Create a scheduled Job to run your script with the new Dataset configuration.

The following example shows how to fetch a large, regularly updated data file from a private S3 bucket with a Job scheduled to run once a week.

-

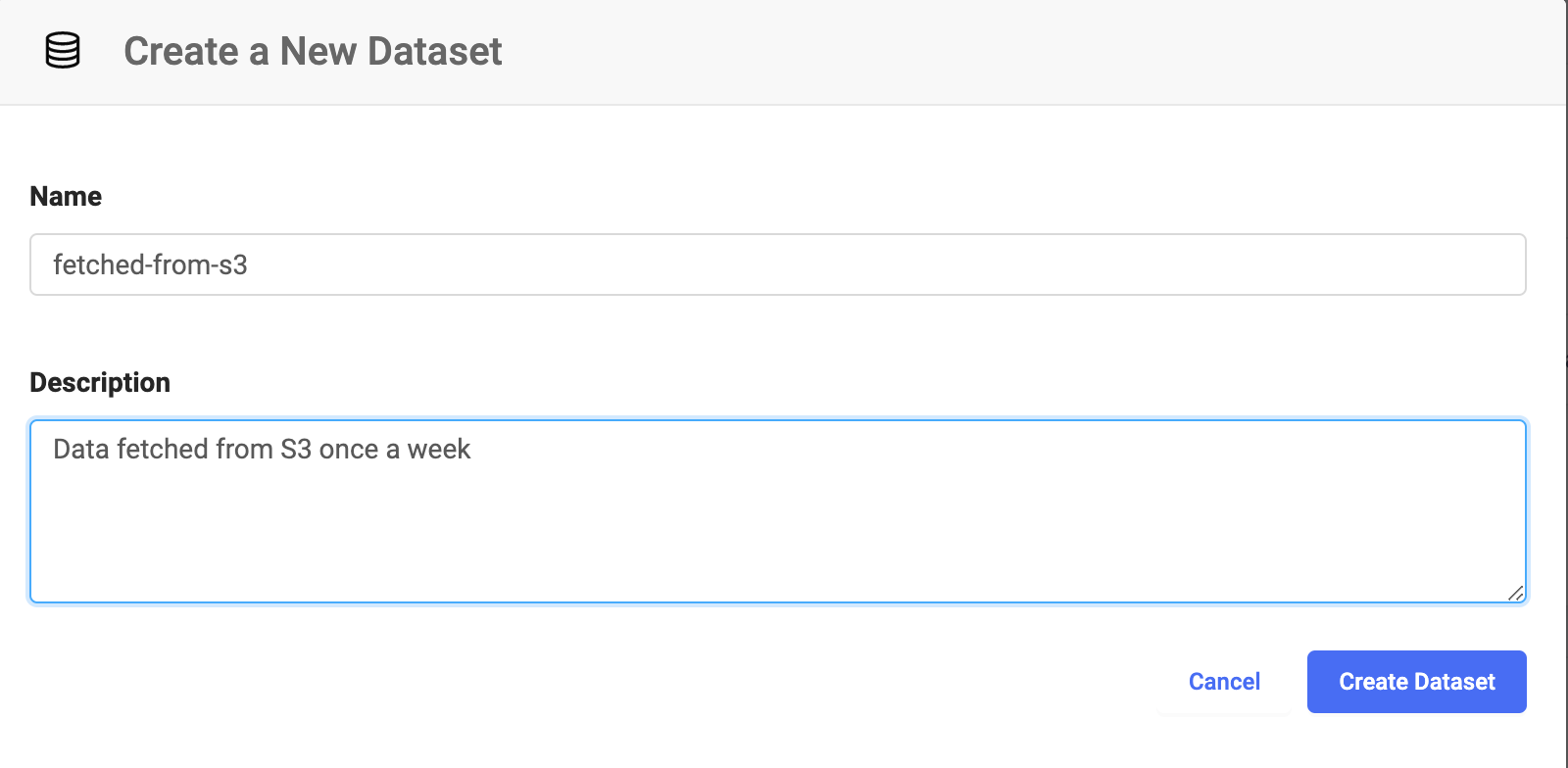

Create a Dataset to hold the file. This example shows the Dataset named

fetched-from-s3.

For this example, assume the S3 bucket is named

my_bucketand the file you want is namedsome_data.csv. You can set up your script like this:fetch-data.pyimport boto3 import io # Create new S3 client client = boto3.client('s3') # Download some_data.csv from my_bucket and write to latest-S3 output mount file = client.download_file('my_bucket', 'some_data.csv', '/domino/datasets/fetched-from-s3/some_data.csv') -

Set up a scheduled Job that executes this script once a week with the correct Dataset configuration.
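The fetch-data.py script downloads the full file on every scheduled run. If the file is large and changes less often than the schedule, one option is to skip the download when the S3 object has not changed. The following is a minimal sketch, not part of the original example. It compares the object's ETag, returned by boto3's head_object, with the value recorded on the previous run.

It rests on two assumptions:

- The Dataset directory keeps its contents between runs.
- The .etag sidecar file and the function name fetch_if_changed are both hypothetical conventions.

```python
import os
from typing import Any


def fetch_if_changed(client: Any, bucket: str, key: str, dest_path: str) -> bool:
    """Download s3://bucket/key to dest_path only when its ETag has changed.

    Returns True if the object was downloaded, False if the copy from the
    previous run was kept.
    """
    # head_object returns object metadata, including the ETag, without the body.
    etag = client.head_object(Bucket=bucket, Key=key)["ETag"]
    # Hypothetical sidecar file that records the ETag seen on the last download.
    etag_path = dest_path + ".etag"

    # Keep the existing copy if both files exist and the recorded ETag matches.
    if os.path.exists(dest_path) and os.path.exists(etag_path):
        with open(etag_path, encoding="utf-8") as f:
            if f.read() == etag:
                return False

    os.makedirs(os.path.dirname(dest_path) or ".", exist_ok=True)
    client.download_file(bucket, key, dest_path)
    # Record the ETag only after the download succeeds.
    with open(etag_path, "w", encoding="utf-8") as f:
        f.write(etag)
    return True
```

In fetch-data.py, the download line would become fetch_if_changed(client, 'my_bucket', 'some_data.csv', '/domino/datasets/fetched-from-s3/some_data.csv'). Note that this stores an extra .etag file in the Dataset next to the data.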